Editor’s note: This case study was originally published in 2019. It has been updated for accuracy and to reflect modern practices.

In our conversations with hundreds of eCommerce business owners, many have told us that their Google Ads campaigns aren’t performing as well as they expected, and that those campaigns could be working more efficiently at a lower cost.

Many of these brands assume that setting up their product feed and launching one Shopping campaign containing all of their products is all that it takes to create a profit.

Spoiler alert: It takes a lot more than that to create a successful eCommerce PPC strategy.

If managed correctly and implemented by pros, Google Ads can be a great revenue driver to grow your eCommerce business — but it takes more than just keywords and ad creative to bring it to fruition.

Today, we’ll show you how to make it happen, by demystifying the combination of eCommerce PPC marketing strategies that we use to get results for dozens of online businesses.

In the following case study, we’ll cover:

- The Google Ads strategies we used for a 9.89x ROAS

- The tiered bidding strategy that drives substantial Google Shopping results

- And the other PPC best practices we recommend to reduce your brand’s spend

The Real-Life Results

It was this common scenario (frustration with subpar Google PPC campaigns) that brought this client to our doorstep.

As an online retailer in the camera equipment niche, they needed to improve their Google Search and Google Shopping campaigns to earn a better return on their substantial ad spend.

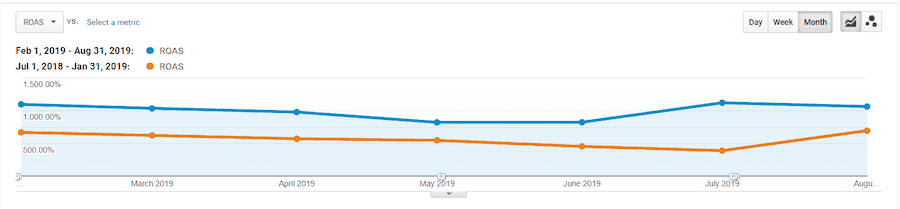

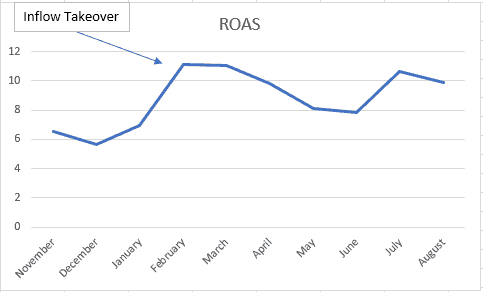

Six months after Inflow took over their PPC efforts, we helped them exceed that goal, with a 76% increase in ROAS (5.61x to 9.89x) from their previous agency’s work.

Even better: Year over year, we netted them a 56% increase in ROAS, from 6.34x to 9.89x.
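For readers who want to check the arithmetic, both lift figures follow from the standard percent-change formula. The snippet below is a minimal sketch; the function name `roas_lift_pct` is a hypothetical helper used only for illustration.

```python
def roas_lift_pct(before: float, after: float) -> float:
    """Percent change in ROAS from `before` to `after`."""
    return (after - before) / before * 100.0


# ROAS figures quoted in this case study.
print(round(roas_lift_pct(5.61, 9.89)))  # 76 (vs. the previous agency)
print(round(roas_lift_pct(6.34, 9.89)))  # 56 (year over year)
```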

So, how did we do it?

Keep reading to find out.

Our clients include not only seven- and eight-figure businesses, but nine-figure annual revenue eCommerce companies, as well. Talk to us today to see if our eCommerce marketing experts can improve your brand’s PPC advertising, search engine optimization (SEO) efforts, and conversion rates.

eCommerce PPC Strategies Implemented for a 9.89x ROAS

While every eCommerce store presents unique advantages and challenges, by working with hundreds of them, we’ve learned just how important it is to follow a system.

Before we do anything else, we always start with an audit and analysis of the client’s product feed, campaigns, and other KPIs to see what is (and isn’t) working.

For this client, their website had a high search volume and an extensive product catalog of cameras, lenses, and other camera-related equipment. But, due to the store’s scale, there was incomplete and missing information about those products in their Google product data feed. Their current PPC campaigns were also too broad given the different product categories on the site, which created wasted spend.

So, we created a plan to increase the client’s ROAS through the following steps:

- Product data feed setup and optimization

- Google Search & Shopping campaign restructure

- Google Display ad optimizations

- Implementation of successful Google campaigns into Bing

By optimizing each of the above, we positioned our client’s products to cover the customer’s entire buying journey, resulting in a lower cost per click and more sales.

1. Product Feed Setup & Optimization

The Google product feed (found in Merchant Center) contains all of your products and their related information, including:

- Product category

- Brand

- Quantities

- Sizes

- Colors

- Materials

- And more

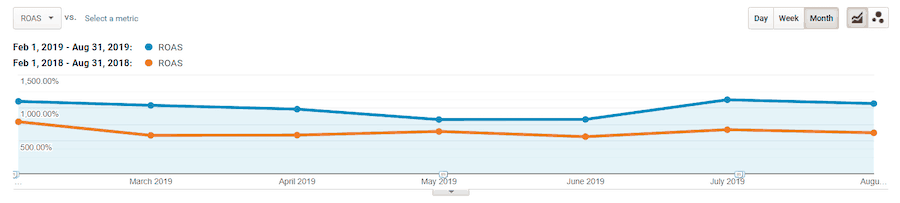

Google Shopping Ads and Display Ads pull this information directly from your store’s feed to populate Google’s search engine results pages (SERPs). This is why optimizing your product data feed is so important.

For this client, we had to ensure that information (like camera brands, lens sizes, and so on) was all present, accurate, and easily found by Google.

Because of their massive product listings, the site had huge potential to be shown for many different specific product queries — but, due to the limited feed data, Google couldn’t match those search queries to products in the store’s catalog.

Now, when a shopper searches Google for a product in our client’s catalog (such as a specific camera lens model), our client’s product is more likely to appear right there as a Shopping ad.
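A feed audit like this can start with a simple completeness check. The sketch below assumes the feed has been exported into a list of dictionaries; the attribute names mirror common Merchant Center feed attributes, but the set of attributes treated as required and the sample rows are hypothetical, not client data.

```python
# Attributes to audit. Which ones matter most for a given catalog is an
# assumption; adjust this tuple to match your own feed.
REQUIRED_ATTRIBUTES = ("title", "brand", "google_product_category", "gtin")


def find_feed_gaps(products):
    """Return {product id: [missing attribute names]} for incomplete items."""
    gaps = {}
    for product in products:
        missing = [
            attr
            for attr in REQUIRED_ATTRIBUTES
            # Treat absent keys, None, and blank strings as missing.
            if not str(product.get(attr) or "").strip()
        ]
        if missing:
            gaps[product["id"]] = missing
    return gaps


# Hypothetical sample rows for illustration only.
sample_feed = [
    {
        "id": "lens-001",
        "title": "50mm f/1.8 Prime Lens",
        "brand": "ExampleBrand",
        "google_product_category": "Cameras & Optics",
        "gtin": "00012345678905",
    },
    {
        "id": "cam-002",
        "title": "Mirrorless Camera Body",
        "brand": "",
        "google_product_category": "Cameras & Optics",
        "gtin": None,
    },
]

print(find_feed_gaps(sample_feed))  # {'cam-002': ['brand', 'gtin']}
```

A report like this gives you a prioritized list of products to fix before any campaign restructuring begins.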

Hot tip: You can easily optimize your feed using a tool like Feedonomics.

2. Shopping & Search Ad Campaign Restructures

We recommend a mixture of Google Shopping ads, Google Search ads, and Google Display ads for most big online retailers. Implementing all three often leads to enhanced product visibility across the buyer’s entire journey, from research through purchase.

So, with their product feed optimized, we began restructuring the client’s Search and Shopping campaigns.

This industry’s high search volume makes it much easier to drive traffic to ads, but also harder to determine the specific combination of queries that drives the highest return.

To address this issue, we created multiple tiered campaigns segmented by product type. In other words, we built different tiers for different product categories — in this case, a lens tier, digital camera tier, video camera tier, film camera tier, etc.

3. Display Ad Optimizations

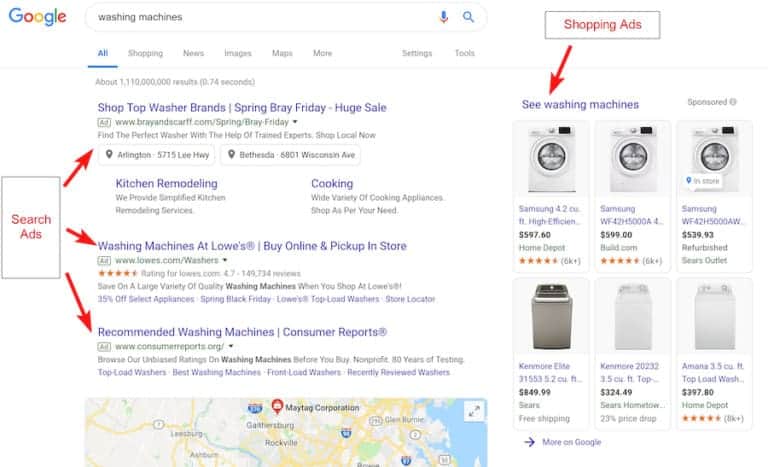

Product ads that follow customers around the web might seem annoying at first glance. But, in fact, retargeting ads convert very well and have a great ROI.

Why? Because they market to customers at the end of the buying cycle (once they’ve already visited the store and looked at product pages).

Before we came on board, shoppers were visiting our client’s store but not being remarketed to. Using Google’s dynamic remarketing feature, we automatically displayed ads to customers who left the client’s site without completing a purchase.

Dynamic remarketing uses your product feed to determine which products Google displays on its ad network. It can also intelligently group different products together based on what’s likely to convert best.

Using dynamic remarketing is a fairly straightforward strategy to skyrocket eCommerce sales and brand awareness, and we believe it’s a must for any online retailer.

Hot tip: Combine your PPC retargeting strategy with your paid social media remarketing data for cross-platform efficiency.

4. Bing Account Changes

Once we saw what was working in Google, we began applying those changes in Bing Ads (now called Microsoft Advertising) to replicate that success.

While Bing has a far lower search engine market share than Google, we’ve found that what works well in Google Ads can often work in Bing Ads, too.

So, why not duplicate the strategies that work in Google to capture shoppers from another search engine?

Of course, this exact approach may not work for every eCommerce business — but, given the lower advertising cost of Bing, it’s always worth the experiment.

Inflow’s Tiered Bidding Strategy: Implementation & Best Practices

When it comes to eCommerce PPC campaigns, patience and willingness to adjust along the way are important. Over time, you’ll identify the highest-return queries and adjust your bids to prioritize them. However, it’s an ongoing process.

In this client’s case, we identified the highest-return search queries based on this store’s different product categories — and then created a tiered campaign for each category.

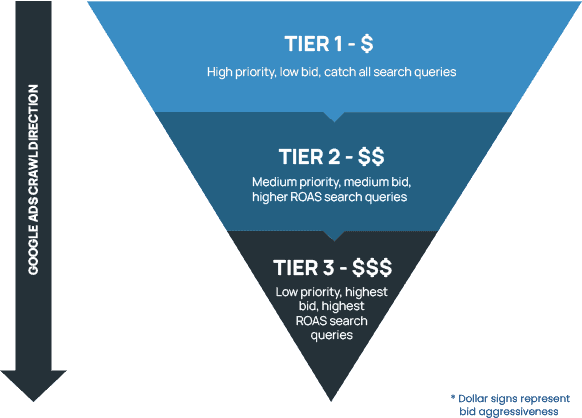

The image above is a visual representation of that tiered strategy. Negative keywords determine which search queries each tier receives. For maximum return, we created multiple tiered campaigns for each product category.

How to Establish Your Tiers

Replicate our tiered approach for your Shopping campaigns by following these steps:

1. Run Search Query reports.

Review historical performance in Google Analytics by running search query reports, going back at least six months. Identify the queries or query combinations that have driven the highest revenue or ROAS for your brand in the past.

2. Filter those queries in Google Analytics.

Identify which of these high-return terms have churn (and which don’t). Then, decide which ones you want to filter into Tier 2 or 3.

3. Mark any interesting patterns.

Because your business and target audience are unique, take note of any patterns or trends that pop up during your review.

For example, with this client, we saw a pattern of queries containing certain modifiers that tended to convert well. So, we identified the revenue and ROAS for those terms for more informed campaign planning.

4. Build out your findings in a spreadsheet.

Organize any found patterns in a spreadsheet list containing the search query, revenue, and ROAS numbers.

5. Segment tiers according to revenue and ROAS numbers.

Once you have all the data, it’s time to optimize your Google Shopping campaigns. For this client, we used priority settings, bid stacking, and negative keywords to create tiers.

The basic premise is to apply low, medium, and high bids to search terms with low, medium, and high returns, thus optimizing your cost-per-click (CPC). Priority levels run in the opposite direction: The low-bid tier gets high priority, the medium-bid tier gets medium priority, and the high-bid tier gets low priority.

For context, the priority setting determines the order in which Google will cycle through the campaigns. High priority literally means Google takes that campaign into account first. If a negative keyword blocks a query in that campaign, the query falls through to the campaign with the next-highest priority.
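The steps above can be sketched in a few lines of code. This is a minimal illustration, assuming a search query report exported as rows with `query`, `cost`, and `revenue` fields; those field names, the sample rows, and the ROAS cutoffs are all hypothetical placeholders rather than the values we used for this client.

```python
from collections import defaultdict


def summarize_queries(rows):
    """Aggregate cost and revenue per search query and compute ROAS."""
    totals = defaultdict(lambda: {"cost": 0.0, "revenue": 0.0})
    for row in rows:
        totals[row["query"]]["cost"] += row["cost"]
        totals[row["query"]]["revenue"] += row["revenue"]

    summary = {}
    for query, t in totals.items():
        # Guard against division by zero for queries with no spend.
        roas = t["revenue"] / t["cost"] if t["cost"] > 0 else 0.0
        summary[query] = {"cost": t["cost"], "revenue": t["revenue"], "roas": roas}
    return summary


def assign_tier(roas, medium_cutoff=4.0, high_cutoff=8.0):
    """Map a ROAS value to Tier 1, 2, or 3. Cutoffs are placeholders."""
    if roas >= high_cutoff:
        return 3
    if roas >= medium_cutoff:
        return 2
    return 1


# Hypothetical report rows for illustration only.
rows = [
    {"query": "camera", "cost": 100.0, "revenue": 200.0},
    {"query": "mirrorless camera", "cost": 50.0, "revenue": 250.0},
    {"query": "examplebrand 50mm f1.8 lens", "cost": 20.0, "revenue": 150.0},
    {"query": "examplebrand 50mm f1.8 lens", "cost": 20.0, "revenue": 250.0},
]

for query, stats in summarize_queries(rows).items():
    print(query, round(stats["roas"], 2), "-> Tier", assign_tier(stats["roas"]))
```

In practice, you would choose the cutoffs by looking at the distribution of ROAS in your own spreadsheet rather than using fixed numbers.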

The Real Client Example

Here’s how this tiered system played out for this client:

Tier 1:

We placed low bids on our catch-all campaign that drives all general queries. We also added negative terms to avoid targeting queries that we wanted to bid higher on in the other tiers.

We set this tier to the “high-priority” setting.

Tier 2:

We placed medium bids on search queries we found to have a higher return than those in Tier 1 (but lower return than the queries we want to filter to Tier 3).

Like in Tier 1, we added negative keywords for the queries we wanted to filter to Tier 3. This tier was set to a medium-priority setting.

Tier 3:

Knowing what the most profitable search queries were for this client, we placed the highest bids on them. This tier was set to a low-priority setting.

Why the tiered system works: By recognizing the performance of a store’s different relevant search queries, you can have much more control over how much ad spend is allocated to each query, which was key in delivering results for this client.
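To make the interplay of priorities and negative keywords concrete, here is a simplified simulation of how a query is routed to a tier. It is a sketch, not a model of Google’s actual auction: it assumes negatives behave like whole-word phrase matches, and the campaign names, bids, and keywords are hypothetical.

```python
from dataclasses import dataclass, field

# Lower rank means the campaign is considered first.
PRIORITY_RANK = {"high": 0, "medium": 1, "low": 2}


@dataclass
class TierCampaign:
    name: str
    priority: str
    bid: float
    negatives: list = field(default_factory=list)

    def blocks(self, query: str) -> bool:
        """True if any negative phrase appears as whole words in the query."""
        padded = f" {query.lower()} "
        return any(f" {neg.lower()} " in padded for neg in self.negatives)


def route_query(query, campaigns):
    """Return the highest-priority campaign that does not block the query."""
    for campaign in sorted(campaigns, key=lambda c: PRIORITY_RANK[c.priority]):
        if not campaign.blocks(query):
            return campaign
    return None


# Hypothetical tiers: bids rise as priority falls.
campaigns = [
    TierCampaign("Tier 1", "high", 0.25, ["mirrorless", "50mm f1.8"]),
    TierCampaign("Tier 2", "medium", 0.60, ["50mm f1.8"]),
    TierCampaign("Tier 3", "low", 1.20),
]

for q in ["camera", "mirrorless camera", "examplebrand 50mm f1.8 lens"]:
    winner = route_query(q, campaigns)
    print(f"{q!r} -> {winner.name} (bid {winner.bid:.2f})")
```

General queries stay in the low-bid catch-all, while each layer of negatives pushes the more valuable queries down to a tier with a higher bid.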

Other eCommerce Google Ads Strategies to Reduce Spend

Many factors can influence the success of your eCommerce PPC strategy. Like with most digital marketing efforts, you’ll need to run some tests and continually adjust your campaigns for maximum return.

In our years of working with eCommerce sites, we’ve identified a few other best practices that drive sales with minimal spend.

As you set up your campaigns, consider incorporating these additional PPC strategies for eCommerce:

1. Always send the best-performing/highest-return queries to Tier 3.

By identifying the highest-return queries, you can ensure less wasted budget by spending the least amount of money on low-return queries and allocating the largest percentage of money to terms you’re positive will actually drive a high return.

2. Use negative targeting.

Negative keyword segmentation in a tiered system allows us to pay less for lower-returning queries. It’s also a helpful tool in regular campaigns for filtering out low-intent or irrelevant search terms.

3. Optimize by device.

After your tiers have run for enough time to gather significant data, further analyze performance by device to ensure no wasted spend.

4. Cut spend on the worst-performing hours/days of the week.

After gathering significant data, you can use a time-of-day analysis to ensure you’re optimizing for your best-performing days and hours.

For example, if your PPC ads have higher click-through rates (CTR) in the evening hours than in the early morning hours, allocate more money to the former for a better potential ROAS.

5. Launch Search competitor campaigns.

Running Search ads built around other brands’ keywords and bids allows you to target your biggest competition. (Rest assured that they’re doing this for your ads, too.)

Don’t know who your competitors are? Start with these seven Google Ads competitor research tools.

Combine this strategy with your regular search ads to maximize your audiences and make it harder for your competitors to succeed.

6. Optimize by demographics.

Run demographic-based analyses for age, gender, and household incomes of your audience. Then, implement your bid adjustments accordingly.

7. Optimize display ads to capture retargeted audience.

While bids and budget are important in your display ad campaigns, you also need to continually update your ad copy to better target shoppers at different stages in the buying funnel. For example, newer users require more brand-focused messaging and calls to action, while previous purchasers do not.

8. Embrace new ad features.

Google is continually releasing new features and capabilities within its advertising network, like its Smart Display campaigns. Don’t hesitate to test these new features as soon as they’re released!

Being the first to implement these features will often give you a competitive advantage, as other brands may be slower to adopt these new options.
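As an illustration of the time-of-day analysis in item 4, the sketch below computes ROAS per hour and flags hours that fall under a target. It assumes performance data exported as rows with `hour`, `cost`, and `revenue` fields; the field names, sample numbers, and target ROAS are hypothetical.

```python
from collections import defaultdict


def roas_by_hour(rows):
    """Return {hour: ROAS} for every hour that has non-zero spend."""
    cost = defaultdict(float)
    revenue = defaultdict(float)
    for row in rows:
        cost[row["hour"]] += row["cost"]
        revenue[row["hour"]] += row["revenue"]
    return {
        hour: revenue[hour] / cost[hour]
        for hour in sorted(cost)
        if cost[hour] > 0
    }


def hours_to_cut(hourly_roas, target_roas):
    """List the hours whose ROAS falls below the target."""
    return [hour for hour, roas in hourly_roas.items() if roas < target_roas]


# Hypothetical rows for illustration only (hour is 0-23).
rows = [
    {"hour": 3, "cost": 40.0, "revenue": 60.0},
    {"hour": 9, "cost": 30.0, "revenue": 150.0},
    {"hour": 20, "cost": 50.0, "revenue": 400.0},
    {"hour": 20, "cost": 50.0, "revenue": 300.0},
]

hourly = roas_by_hour(rows)
print(hourly)                     # {3: 1.5, 9: 5.0, 20: 7.0}
print(hours_to_cut(hourly, 4.0))  # [3]
```

The same grouping approach works for the device and demographic analyses in items 3 and 6: swap the `hour` field for a device or age-range field.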

Takeaways & Results

If there’s one thing to know about eCommerce PPC, it’s that it’s a dynamic advertising strategy. You’ll need to continually manage your campaigns and approach for the best results, based on what you learn from tests and past performance.

In other words, Google Ads is not a “set it and forget it” platform.

But, when you do put in the work to continually optimize and improve, the results can be well worth your time. After all, it was this ongoing adjustment to our client’s strategy that delivered a 56% increase in ROAS, year over year.

If you learn anything from this case study, we hope it’s how a Google Ads strategy based on systematic review and restructuring can quickly yield a higher ROAS for your eCommerce brand.

Want to see similar results for your business? Every eCommerce website that works with Inflow receives a customized set of strategies like these to maximize their Google Ads campaign budgets.

If you would like us to evaluate and improve your online store’s PPC marketing campaigns (or identify additional growth opportunities), request a free proposal today to see how we can help.